Semi-Automatic Visual Assistant

- The ABILITY Team

- Oct 31, 2025

- 1 min read

Great news!

We’re happy to share that our journal paper is now published in IEEE Access and available on IEEE Xplore: https://ieeexplore.ieee.org/document/11146787

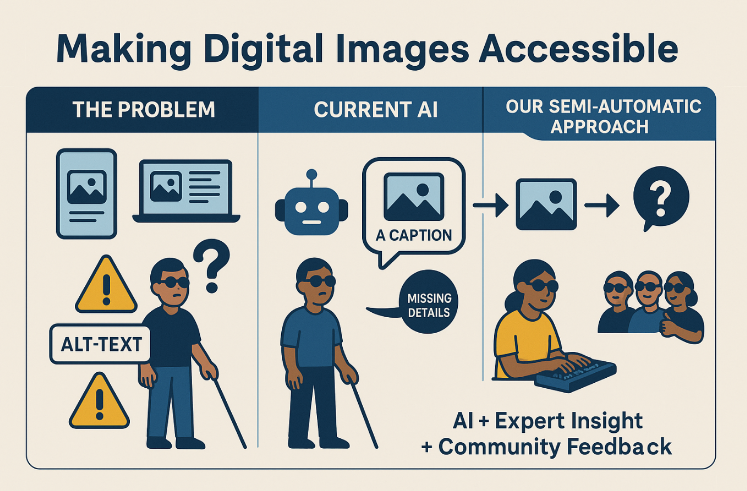

In this work, we explore a semi-automatic, human-centred AI approach to generating conversational image descriptions that truly fit their target users: Blind and Visually Impaired (BVI) people.

Here is the idea in a nutshell:

LLMs generate initial image-conversation drafts → BVI experts refine what matters most → the AI learns from those refinements to produce better, more relevant conversations → BVI end-users validate the gains in usefulness and satisfaction.

It is a practical recipe for AI that adapts to people, not the other way around: scaling inclusive, BVI-centred training data and improving real accessibility outcomes.

One more promising step toward the vision of "Symbiotic Intelligence" — where humans and AI learn from each other and co-adapt for the benefit of all.
